This is the second post in a series on Deis Workflow, the second major release of the Deis PaaS. Workflow builds on Kubernetes and Docker to provide a lightweight PaaS with a Heroku-inspired workflow.

In part one, we gave a brief conceptual overview, including Twelve-Factor apps, Docker, Kubernetes, Workflow applications, the “build, release, run” cycle, and backing services. We also explained the benefits of Workflow. In summary:

Workflow is fast and easy to use. You can deploy anything you like. Releases are versioned and rollbacks are simple. You can scale up and down effortlessly. And it is 100% open source, using the latest distributed systems technology.

In this post, we’ll take a look at the architecture of Workflow and how Workflow is composed from multiple, independent components.

Deis Workflow is built using a service-oriented architecture. All components are published as a set of container images that can be deployed to any Kubernetes cluster.

Overview

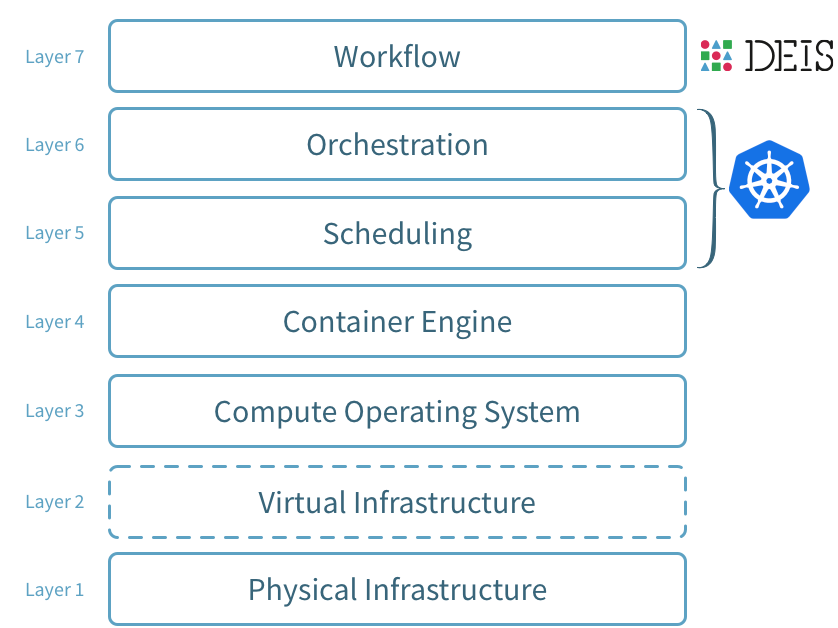

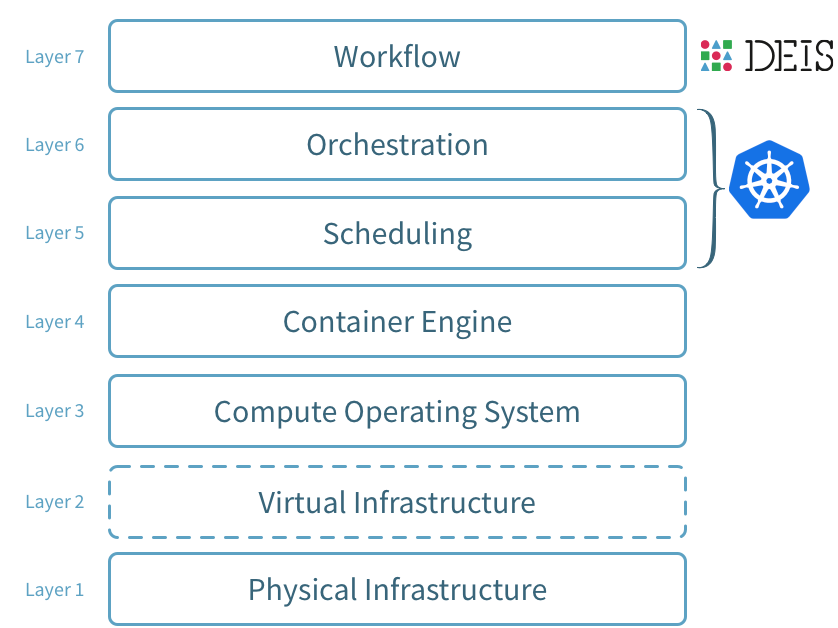

Deis Workflow sits at the top of your stack:

Operators can use Helm Classic to configure and install the Workflow components, which interface directly with the underlying Kubernetes cluster.

Service discovery, container availability, and networking are all delegated to Kubernetes, while Workflow provides a clean and simple developer experience.

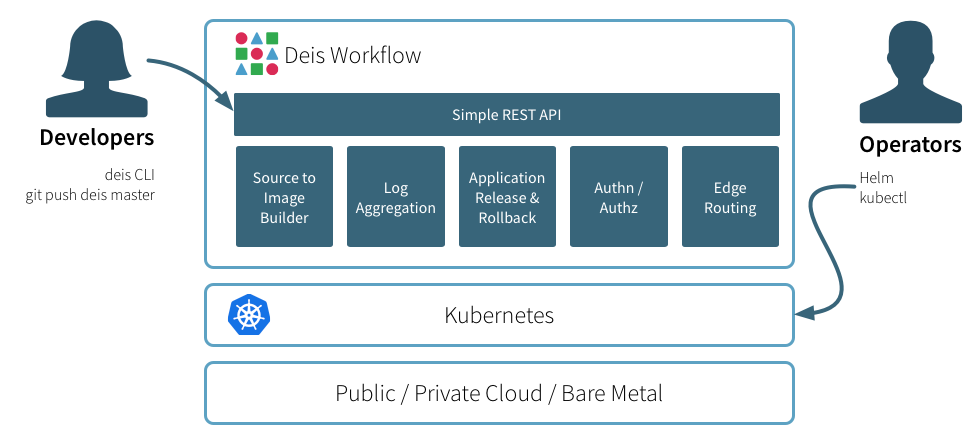

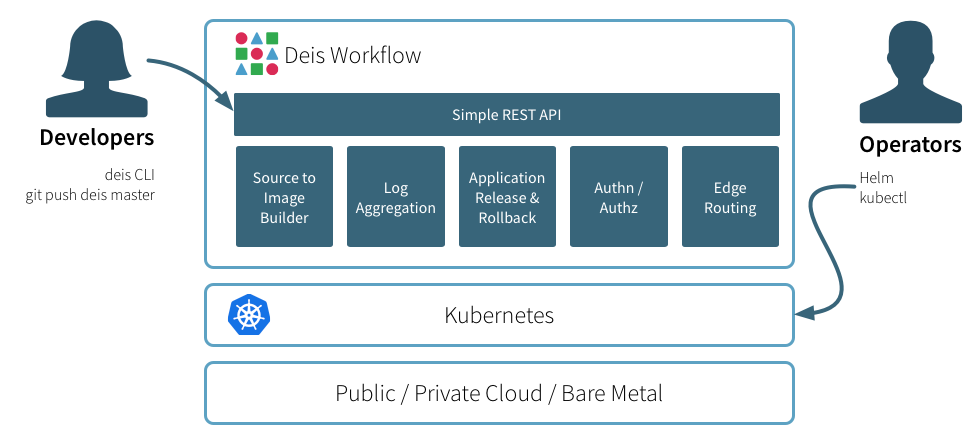

Workflow provides additional functionality on top of your Kubernetes cluster:

In detail, these are:

Check out the components docs for more.

Kubernetes Native

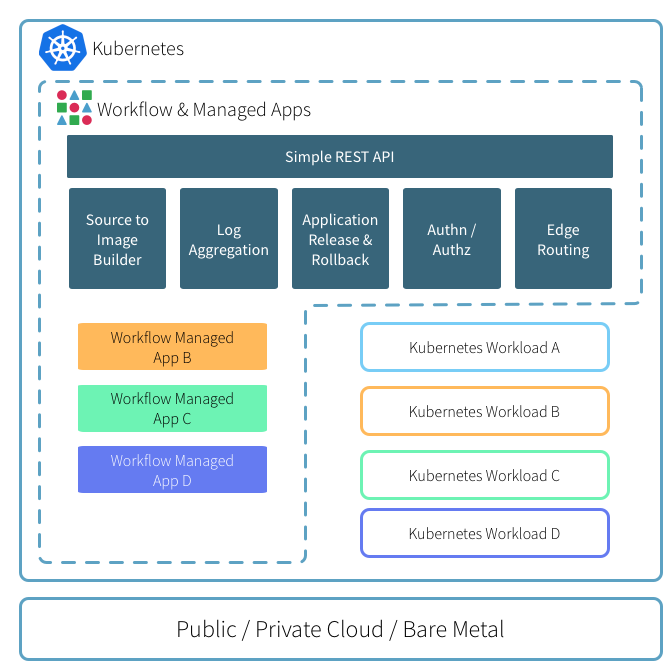

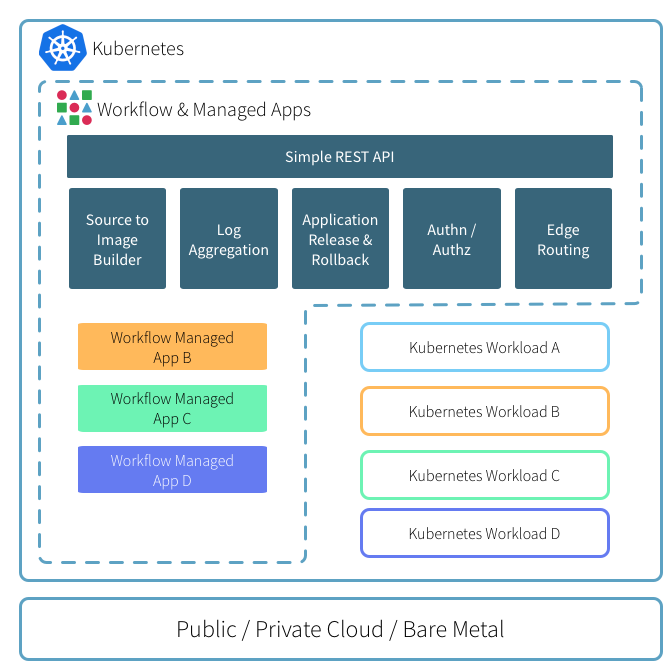

All platform components and applications deployed via Workflow expect to be running on an existing Kubernetes cluster.

This means that you can happily run your Kubernetes-native workloads next to applications that are managed through Deis Workflow.

Here’s how you might partition a Kubernetes cluster:

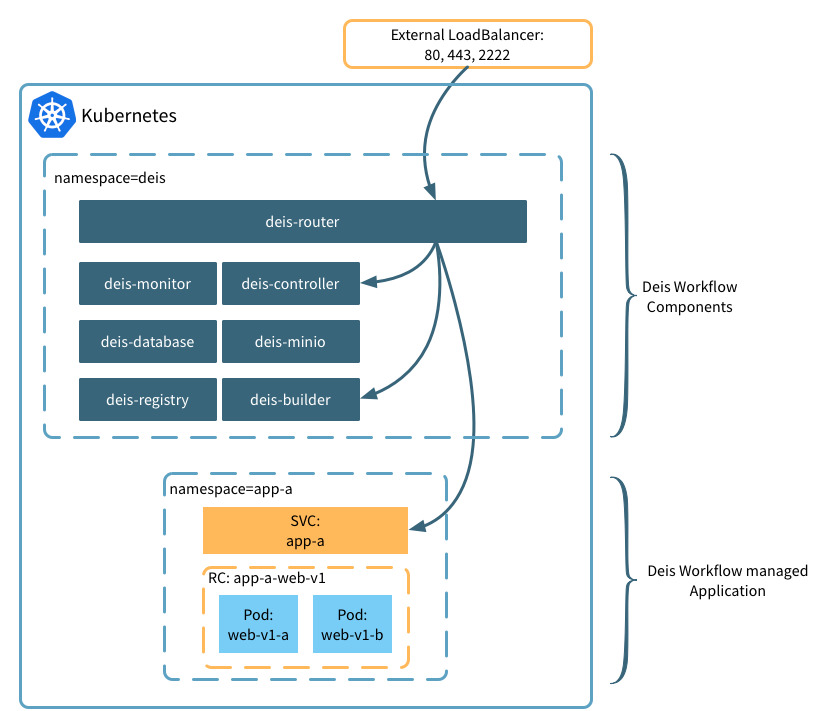

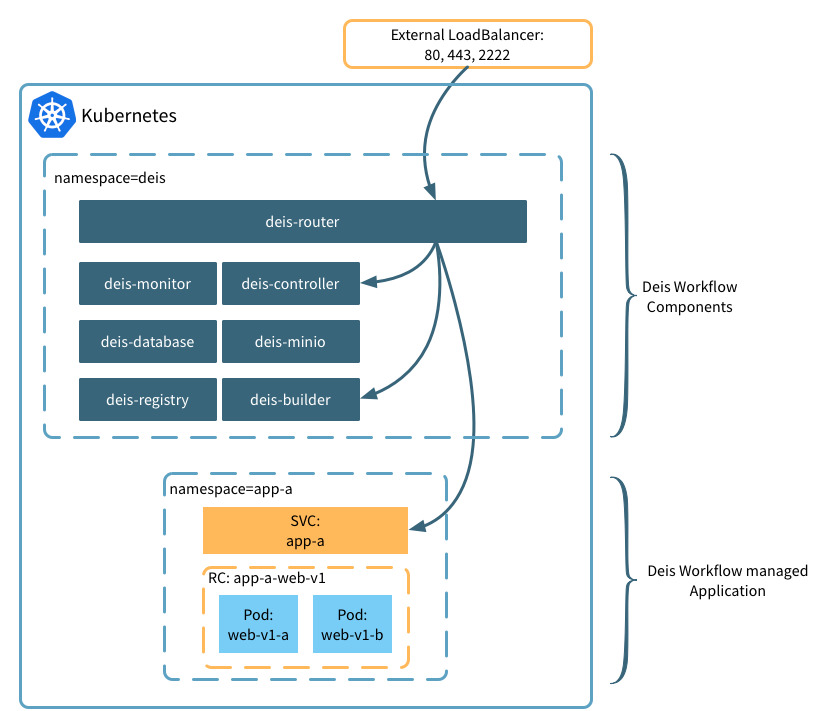

Application Layout and Edge Routing

By default Workflow creates per-application namespaces and services so you can easily connect your applications to other on-cluster services with standard Kubernetes mechanisms. For example:

The router component is responsible for routing HTTP(S) traffic to your applications as well as proxying git push and platform API traffic.

By default, the router component is deployed as a Kubernetes service with type LoadBalancer. Depending on your configuration, this will provision a cloud-native load balancer automatically.

The router automatically discovers routable applications, SSL/TLS certificates, and application-specific configurations through the use of Kubernetes annotations. And any changes to router configuration or certificates are applied within seconds.

Topologies

Unlike version one of the Deis PaaS, Deis Workflow no longer dictates a specific topology or server count for your deployment.

Workflow platform components will happily run on single-server Kubernetes configurations as well as multi-server production Kubernetes clusters.

Workflow is composed of a number of small, independent services that combine to create a distributed PaaS. All Workflow components are deployed as services (and associated controllers) in your Kubernetes cluster.

All Workflow components are built with composability in mind. If you need to customize one of the components for your specific deployment or need the functionality (divorced from Workflow proper) in your own project, we invite you to give it a shot!

Controller

The controller component is an HTTP API server which serves as the endpoint for the deis CLI. The controller performs management functions for the platform, as well as interfacing with your Kubernetes cluster.

The controller persists all of its data to the database component.

Database

The database component is a managed instance of PostgreSQL, and holds the majority of the platform state. Backups and WAL files are pushed to Workflow’s object storage via WAL-E. When the database is restarted, backups are fetched and replayed from object storage so no data is lost.

Builder

The builder component is responsible for accepting code pushes via Git and managing the build process of your application.

The builder process:

- Receives incoming git push requests over SSH

- Authenticates the user via SSH key

- Authorizes the user’s access to push code to the application

- Starts the application build phase

- Triggers a new release via the controller
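
The steps above run in order, and a push that fails authentication or authorization never reaches the build phase. A minimal sketch of that sequencing follows. It is not the builder's actual code: the `Platform` class, its fields, and `handle_push` are hypothetical stand-ins that keep everything in memory.

```python
from dataclasses import dataclass, field


@dataclass
class Platform:
    # Hypothetical in-memory stand-ins for platform state.
    ssh_keys: dict = field(default_factory=dict)     # key fingerprint -> username
    permissions: dict = field(default_factory=dict)  # app name -> set of usernames
    releases: dict = field(default_factory=dict)     # app name -> list of (version, artifact)


def handle_push(platform, key_fingerprint, app, build):
    """Walk one push through the stages in order, stopping at the first failure."""
    # 1. Authenticate the user via SSH key.
    user = platform.ssh_keys.get(key_fingerprint)
    if user is None:
        raise PermissionError("unknown SSH key")

    # 2. Authorize the user's access to push code to this application.
    if user not in platform.permissions.get(app, set()):
        raise PermissionError(f"{user} may not push to {app}")

    # 3. Run the build phase (a callable supplied by the caller).
    artifact = build(app)

    # 4. Trigger a new release (here: record the next version number).
    history = platform.releases.setdefault(app, [])
    version = len(history) + 1
    history.append((version, artifact))
    return version
```

In the real platform the final step is a call to the controller rather than a local list append.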

Builder currently supports both buildpack and Dockerfile-based builds.

For buildpack deploys, the builder component will launch a one-shot pod in the deis namespace. This pod runs the slugbuilder component which handles default and custom buildpacks.

The compiled application is a slug, consisting of your application code and all of its dependencies, as determined by the buildpack. The slug is pushed to the cluster-configured object storage for later execution.

For Dockerfile applications (i.e. apps with a Dockerfile in the root of the repository) builder will instead launch the dockerbuilder component to package your application.

Instead of generating a slug, dockerbuilder generates a Docker image (using the underlying Docker engine). The completed image is pushed to the managed Workflow Docker registry. For more information, see the docs on using Dockerfiles.
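
The choice between the two build paths can be summarized in a few lines. This is an illustrative sketch only: `plan_build` and `BUILD_PLANS` are hypothetical names, and the only rule encoded is the one stated above, that a Dockerfile in the repository root selects the Dockerfile path.

```python
# Which component runs, what it produces, and where the result is pushed,
# as described in the text. The dictionary layout itself is an assumption.
BUILD_PLANS = {
    "buildpack": {
        "component": "slugbuilder",
        "artifact": "slug",
        "destination": "object storage",
    },
    "dockerfile": {
        "component": "dockerbuilder",
        "artifact": "Docker image",
        "destination": "registry",
    },
}


def plan_build(root_files):
    """Pick a build path from the file names in the repository root."""
    kind = "dockerfile" if "Dockerfile" in root_files else "buildpack"
    return {"type": kind, **BUILD_PLANS[kind]}


print(plan_build(["Dockerfile", "app.py"])["component"])
print(plan_build(["Procfile", "app.py"])["component"])
```

The first call selects `dockerbuilder` and the second selects `slugbuilder`.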

Slugrunner

The slugrunner component is responsible for executing buildpack applications. Slugrunner receives slug information from the controller and downloads the application slug from Workflow’s object storage before launching your application processes.

Object Storage

Some Workflow components ship their persistent data to cluster-level object storage.

Currently, three components use Object Storage:

- The database stores its WAL files

- The registry stores Docker images

- The slugbuilder stores slugs

Workflow ships with Minio by default. Out of the box, Minio is not configured for persistence and provides in-cluster, ephemeral object storage. If the Minio server crashes, all data will be lost and cannot be regenerated, so we recommend this configuration for dev environments only.

If you feel comfortable using Kubernetes persistent volumes, you may configure Minio to use the persistent storage available in your environment. However, if you tie Minio to your Kubernetes persistent storage, your Workflow object storage is now dependent on that volume being available, not having network issues, and so on.

Workflow also supports off-cluster storage, which should be more reliable, and this is our recommended production deployment configuration. Off-cluster object storage currently supports Google Cloud Storage, AWS S3, and Azure Storage.

Registry

The registry component is a managed Docker registry which holds application images generated by the builder component. The registry persists Docker images to Workflow object storage.

Router

The router component is based on nginx and is responsible for routing inbound HTTP(S) traffic to your applications. The default Workflow charts provision a Kubernetes service in the deis namespace with a service type of LoadBalancer.

Depending on your Kubernetes configuration, this may provision a cloud-based load balancer automatically if that is supported by your cloud provider.

The router component uses Kubernetes annotations for both application discovery and router configuration.
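
To make annotation-driven discovery concrete, here is a rough sketch of how a routing table could be derived from service metadata. The annotation keys `example.test/routable` and `example.test/domains` are placeholders invented for this example, not the keys Workflow actually uses, and the services are plain dictionaries rather than objects fetched from the Kubernetes API.

```python
ROUTABLE_KEY = "example.test/routable"  # hypothetical annotation key
DOMAINS_KEY = "example.test/domains"    # hypothetical annotation key


def build_routes(services):
    """Map each domain to the name of the routable service that claims it."""
    routes = {}
    for svc in services:
        annotations = svc.get("annotations", {})
        # Services that are not marked routable are ignored entirely.
        if annotations.get(ROUTABLE_KEY) != "true":
            continue
        # Domains are assumed to be a comma-separated list in one annotation.
        for domain in annotations.get(DOMAINS_KEY, "").split(","):
            domain = domain.strip()
            if domain:
                routes[domain] = svc["name"]
    return routes
```

Because the routing table is recomputed from metadata alone, changing an annotation is enough to change routing. No separate router configuration file is involved in this model.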

Logger

The logging subsystem has two components:

- The fluentd component handles log shipping

- The logger component maintains a ring-buffer of application logs

The fluentd component is deployed to your Kubernetes cluster via daemon sets. fluentd subscribes to all container logs, decorates the output with Kubernetes metadata, and can be configured to drain logs to multiple destinations. By default, fluentd ships logs to the logger component, which powers deis logs.

The logger component receives log streams from fluentd, collating by application name. The logger does not persist logs to disk, instead maintaining an in-memory ring-buffer.

This setup allows the end user to configure multiple destinations, such as Elasticsearch and syslog-compatible endpoints like Papertrail. See the docs on how to set up off-cluster logging.
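
The logger's in-memory ring buffer, collated by application name, can be illustrated with a bounded deque per application. This is a sketch of the technique, not the logger component's implementation: the class name, method names, and default capacity are all assumptions.

```python
from collections import defaultdict, deque


class RingBufferLogger:
    """Keep only the most recent `capacity` log lines per application, in memory."""

    def __init__(self, capacity=1000):
        # A deque with maxlen silently discards the oldest entry when full.
        self._buffers = defaultdict(lambda: deque(maxlen=capacity))

    def write(self, app, line):
        self._buffers[app].append(line)

    def tail(self, app, n=100):
        # .get() avoids creating an empty buffer for unknown applications.
        buf = self._buffers.get(app)
        if buf is None or n <= 0:
            return []
        return list(buf)[-n:]


logger = RingBufferLogger(capacity=3)
for i in range(1, 6):
    logger.write("web", f"line {i}")
print(logger.tail("web"))  # ['line 3', 'line 4', 'line 5']
```

The trade-off is the one described above: memory use stays bounded and nothing touches disk, but older lines are gone once the buffer wraps or the process restarts.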

Workflow Manager

The Workflow manager component will regularly check your Workflow cluster against the latest stable release of Deis Workflow components. If components are missing due to failure or are out of date, Workflow operators will be notified.
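
The comparison this describes can be sketched as a diff between what is installed and what the latest stable release contains. The function name, the use of version tuples, and the component versions shown are all made up for illustration. How the Workflow manager actually obtains and compares versions is not covered here.

```python
def check_components(installed, latest):
    """Report components that are missing or older than the latest stable release.

    Both arguments map component name -> version tuple, e.g. (2, 0, 1).
    """
    report = {}
    for name, wanted in latest.items():
        have = installed.get(name)
        if have is None:
            report[name] = "missing"
        elif have < wanted:
            report[name] = "out of date"
    return report


# Hypothetical version numbers, for illustration only.
latest = {"controller": (2, 0, 1), "router": (2, 0, 1), "builder": (2, 0, 0)}
installed = {"controller": (2, 0, 1), "router": (2, 0, 0)}
print(check_components(installed, latest))
# {'router': 'out of date', 'builder': 'missing'}
```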

Workflow CLI

The Workflow CLI component provides the deis command line utility used to interact with the Workflow platform.

Running deis help will give you an up-to-date list of deis commands. To learn more about a command, run deis help <command>.

In this post we gave an overview of the Deis Workflow architecture, including platform services, Kubernetes-native functionality, application layout, edge routing, and topologies. We also took a look at how Workflow is composed of many independent components.

In the next and final post in this series, we’ll install Workflow.